Blazor WASM SEO - Using pre-rendering to solve SPA SEO problems (Prerender.io & Cloudflare Workers tutorial)

Blazor WASM preparatory work (can be skipped if you use a front-end framework such as Vue or React)

For search engines and social platforms to read a page's data correctly, Open Graph tags should be added to the <head> of each page.

For details, refer to The Open Graph protocol; only the Blazor WASM implementation is shown here. To make the tags easy to reuse, the whole Open Graph block can be wrapped in a single component.

Add a Razor component under Client > Shared (named C_SEO.razor in this article, matching the usage shown later) with the following code, adjusting it to your needs:

```razor
@* Shared SEO component: sets the page title plus Open Graph and Twitter meta tags. *@
<PageTitle>@title</PageTitle>

<HeadContent>
    <meta property="og:title" content="@title">
    <meta property="og:type" content="website">
    <meta property="og:url" content="@url">
    <meta property="og:description" content="@description">
    <meta property="og:image" content="@image">
    <meta property="og:image:alt" content="Shop">
    <meta name="twitter:card" content="summary_large_image">
    @* A literal at sign must be doubled in Razor markup; replace the handle with your own. *@
    <meta name="twitter:site" content="@@your_twitter">
    <meta name="author" content="alvin">
    <meta name="description" content="@description">
    <meta name="locale" content="zh-tw">
    <meta name="scope" content="Accessories">
</HeadContent>

@code {
    // Values supplied by the page that hosts this component.
    [Parameter] public string title { get; set; } = string.Empty;
    [Parameter] public string url { get; set; } = string.Empty;
    [Parameter] public string description { get; set; } = string.Empty;
    [Parameter] public string image { get; set; } = string.Empty;
}
```

Once the component is built, add it to every page you want search engines to index, changing the parameters for each page.

```razor
@inject NavigationManager NavigationManager
<C_SEO title="@pageTitle" description="@product.Description" image="@product.ImageUrl" url="@NavigationManager.Uri" />
```

If you inspect the rendered page in the browser, you can see the custom Open Graph tags in <head>. I originally thought this would solve the problem, but when I searched for the site or shared a link on social media, I found that the crawler could not find the custom Open Graph tags.

Problem

SPA (Single Page Application) websites, such as those built with front-end frameworks like Vue and React, render their content dynamically with JavaScript, unlike traditional static websites. Users do not have to reload the entire page on every navigation; only the required data and graphics are updated when the page switches. This improves performance and makes page transitions faster and smoother, so single-page applications improve the user experience by updating content on the client side.

However, a crawler that does not wait for the dynamically rendered content sees none of the site's actual content. If the website is built with Blazor WASM, then no matter which page the search engine requests, it sees only the content of index.html, as follows:

```html
<div id="app">Loading...</div>

<div id="blazor-error-ui">
    An unhandled error has occurred.
    <a href="" class="reload">Reload</a>
    <a class="dismiss">🗙</a>
</div>
```
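To make the problem concrete, here is a minimal sketch of what a crawler that does not execute JavaScript can extract from the raw HTML. The function name `extractOpenGraph` and the sample markup are assumptions made for illustration, and the regular expression only handles the simple `property="..." content="..."` attribute order used in this article.

```javascript
// Collects <meta property="og:*" content="..."> pairs from raw HTML,
// i.e. what a crawler sees when it does not run JavaScript.
function extractOpenGraph(html) {
  const tags = {};
  const metaPattern = /<meta\s+property="(og:[^"]+)"\s+content="([^"]*)"\s*\/?>/gi;
  for (const match of html.matchAll(metaPattern)) {
    tags[match[1]] = match[2];
  }
  return tags;
}

// The unrendered Blazor WASM shell from index.html.
const shellHtml = '<div id="app">Loading...</div>';

// Hypothetical markup after the SEO component has rendered.
const renderedHtml =
  '<head><meta property="og:title" content="Demo product">' +
  '<meta property="og:type" content="website"></head>';

console.log(extractOpenGraph(shellHtml));    // no og:* keys
console.log(extractOpenGraph(renderedHtml)); // og:title and og:type
```

The shell contains no Open Graph tags at all, which is why search results and link previews fall back to the contents of index.html.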

Solution

To let crawlers find the correct information, you need pre-rendering: the rendered content of each page is stored in advance and served directly when a crawler visits the site.

The service provided by Prerender.io can cache the site's rendered content in advance, and a middleware then determines whether each visitor is a regular user or a crawler. This middleware can be built with Cloudflare Workers.

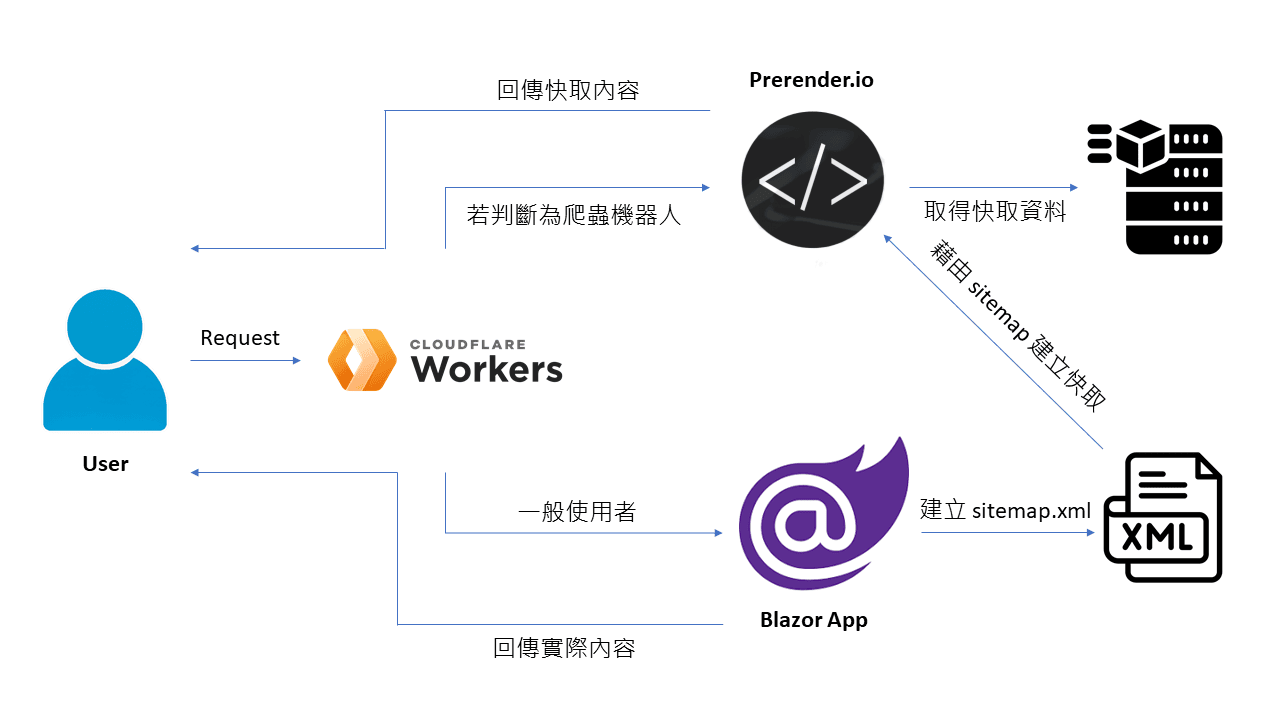

System diagram:

Cloudflare Workers

Cloudflare Workers is a service provided by Cloudflare that allows developers to deploy and execute JavaScript or WebAssembly code on Cloudflare's globally distributed edge network.

We can use Cloudflare Workers to implement the middleware that determines whether an incoming request comes from a crawler or a regular user.

To use Cloudflare Workers, you must first let Cloudflare manage your domain's DNS. The domain for the current project was purchased through GoDaddy, so the first step is to add the site in Cloudflare (the free plan is enough). If your site already has DNS records at GoDaddy, they are added automatically. Once the site is added, Cloudflare gives you its name server addresses; enter them in the name server settings of GoDaddy DNS.

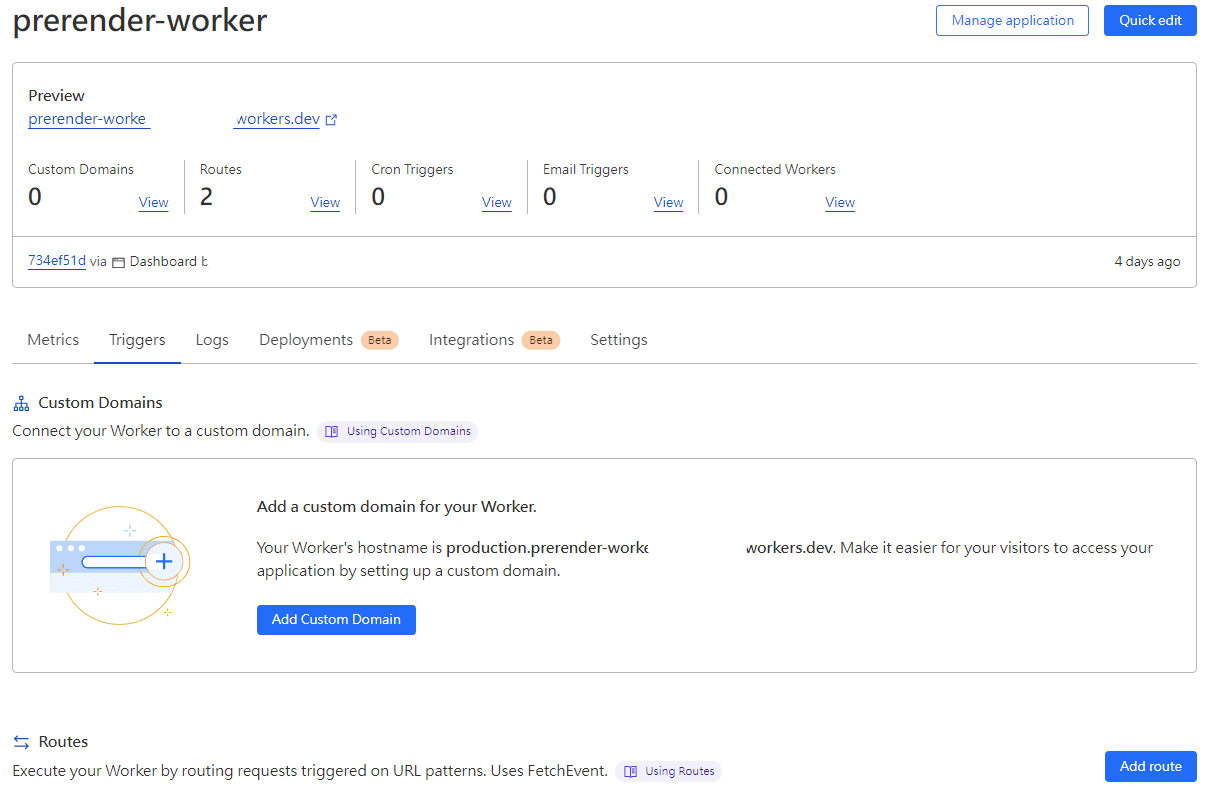

Deploy the Prerender worker on Cloudflare

- From the home page, click Workers & Pages in the left sidebar.

- Create a Worker and publish it. The first time you create a Worker, Cloudflare provides a Hello World example. Create it as is; you can modify its content afterwards.

- Next, copy and paste the code that Prerender.io provides for using Cloudflare as middleware, then click Save and Deploy to finish. (To see this code, choose Cloudflare as the integration method in Prerender.io.)

```js
// User agents handled by Prerender
const BOT_AGENTS = [
  "googlebot",
  "yahoo! slurp",
  "bingbot",
  "yandex",
  "baiduspider",
  "facebookexternalhit",
  "twitterbot",
  "rogerbot",
  "linkedinbot",
  "embedly",
  "quora link preview",
  "showyoubot",
  "outbrain",
  "pinterest/0.",
  "developers.google.com/+/web/snippet",
  "slackbot",
  "vkshare",
  "w3c_validator",
  "redditbot",
  "applebot",
  "whatsapp",
  "flipboard",
  "tumblr",
  "bitlybot",
  "skypeuripreview",
  "nuzzel",
  "discordbot",
  "google page speed",
  "qwantify",
  "pinterestbot",
  "bitrix link preview",
  "xing-contenttabreceiver",
  "chrome-lighthouse",
  "telegrambot",
  "integration-test", // Integration testing
  "google-inspectiontool"
];

// These are the extensions that the worker will skip prerendering
// even if any other conditions pass.
const IGNORE_EXTENSIONS = [
  ".js",
  ".css",
  ".xml",
  ".less",
  ".png",
  ".jpg",
  ".jpeg",
  ".gif",
  ".pdf",
  ".doc",
  ".txt",
  ".ico",
  ".rss",
  ".zip",
  ".mp3",
  ".rar",
  ".exe",
  ".wmv",
  ".doc",
  ".avi",
  ".ppt",
  ".mpg",
  ".mpeg",
  ".tif",
  ".wav",
  ".mov",
  ".psd",
  ".ai",
  ".xls",
  ".mp4",
  ".m4a",
  ".swf",
  ".dat",
  ".dmg",
  ".iso",
  ".flv",
  ".m4v",
  ".torrent",
  ".woff",
  ".ttf",
  ".svg",
  ".webmanifest",
];

export default {
  /**
   * Hooks into the request, and changes origin if needed
   */
  async fetch(request, env) {
    return await handleRequest(request, env).catch(
      (err) => new Response(err.stack, { status: 500 })
    );
  },
};

/**
 * @param {Request} request
 * @param {any} env
 * @returns {Promise<Response>}
 */
async function handleRequest(request, env) {
  const url = new URL(request.url);
  const userAgent = request.headers.get("User-Agent")?.toLowerCase() || "";
  const isPrerender = request.headers.get("X-Prerender");
  const pathName = url.pathname.toLowerCase();
  const extension = pathName
    .substring(pathName.lastIndexOf(".") || pathName.length)
    ?.toLowerCase();

  // Prerender loop protection
  // Non robot user agent
  // Ignore extensions
  if (
    isPrerender ||
    !BOT_AGENTS.some((bot) => userAgent.includes(bot)) ||
    (extension.length && IGNORE_EXTENSIONS.includes(extension))
  ) {
    return fetch(request);
  }

  // Build Prerender request
  const newURL = `https://service.prerender.io/${request.url}`;
  const newHeaders = new Headers(request.headers);

  newHeaders.set("X-Prerender-Token", env.PRERENDER_TOKEN);

  return fetch(new Request(newURL, {
    headers: newHeaders,
    redirect: "manual",
  }));
}
```

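The code handles each incoming HTTP request and uses the User-Agent header and the file extension to decide whether to use the Prerender service. A request is passed through to the origin unchanged if it already carries the X-Prerender header (loop protection), if its User-Agent does not match a known bot, or if its extension is in the ignore list; otherwise it is forwarded to service.prerender.io with the token attached.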
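The decision described above can be isolated into a pure function, which makes it easy to try out locally without deploying a worker. This is only a sketch: `shouldPrerender` is a hypothetical helper that is not part of the Prerender.io code, and the two lists are shortened subsets of the ones in the worker.

```javascript
// Shortened subsets of the worker's lists, for illustration only.
const BOT_AGENTS = ["googlebot", "bingbot", "facebookexternalhit", "twitterbot"];
const IGNORE_EXTENSIONS = [".js", ".css", ".png", ".jpg", ".ico", ".svg"];

// Returns true when the request should be forwarded to the Prerender service.
function shouldPrerender({ userAgent = "", pathname = "/", isPrerender = false }) {
  const agent = userAgent.toLowerCase();
  const path = pathname.toLowerCase();
  const dot = path.lastIndexOf(".");
  const extension = dot === -1 ? "" : path.substring(dot);

  if (isPrerender) return false;                                     // loop protection
  if (!BOT_AGENTS.some((bot) => agent.includes(bot))) return false;  // regular user
  if (IGNORE_EXTENSIONS.includes(extension)) return false;           // static asset
  return true;
}

const googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
const chrome = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36";

console.log(shouldPrerender({ userAgent: googlebot, pathname: "/products/1" }));  // true
console.log(shouldPrerender({ userAgent: chrome, pathname: "/products/1" }));     // false
console.log(shouldPrerender({ userAgent: googlebot, pathname: "/css/app.css" })); // false
```

Only a bot requesting an actual page is sent to Prerender; regular users and static assets always go straight to the origin.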

- Next, return to the preview screen of this worker, click Triggers, then click Add route below. Fill in the route for the website, such as `*your-domain.com/*`, choose the zone the website belongs to, and click Add route to save.

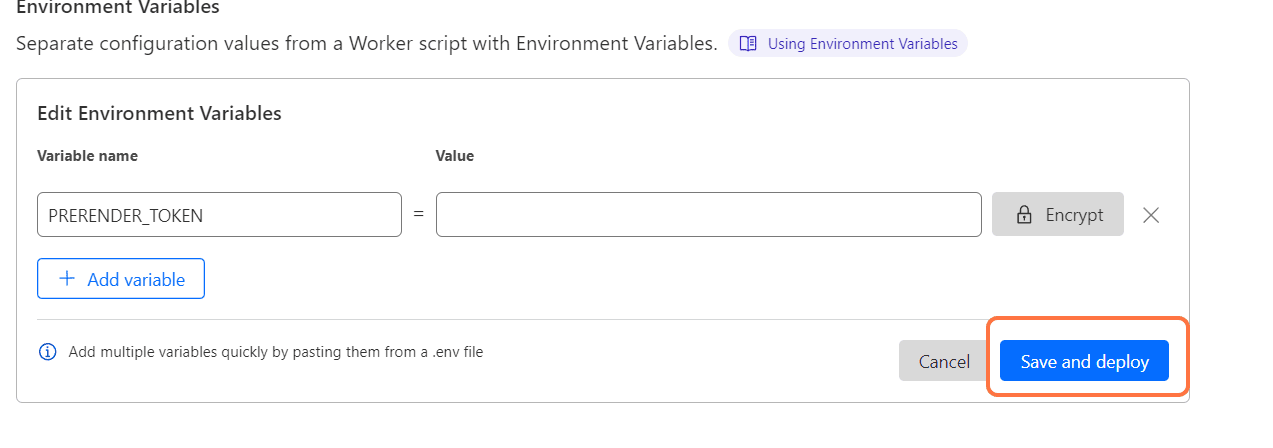

- The next step is to add the Prerender token to the environment variables. Click Settings > Variables > Add Variable, then paste the token provided by Prerender.io.

Note: the variable name must be PRERENDER_TOKEN, because the worker code reads the token from env.PRERENDER_TOKEN.

After you save, the Prerender worker is ready to run.
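The reason the variable name matters is that the worker looks the token up by that exact key on `env`; with any other name, `env.PRERENDER_TOKEN` is undefined. The sketch below shows how the upstream request is assembled and adds a guard for a missing token. `buildPrerenderRequest` is a hypothetical helper and the guard is an addition for illustration, not something the Prerender.io code does.

```javascript
// Builds the URL and token header for the request sent to the Prerender service.
// Throws early if the environment variable is missing or misnamed.
function buildPrerenderRequest(requestUrl, env) {
  const token = env.PRERENDER_TOKEN;
  if (typeof token !== "string" || token.length === 0) {
    throw new Error("PRERENDER_TOKEN is not set on this worker");
  }
  return {
    url: `https://service.prerender.io/${requestUrl}`,
    headers: { "X-Prerender-Token": token },
  };
}

// Placeholder token and domain, for illustration only.
const upstream = buildPrerenderRequest(
  "https://your-domain.com/products/1",
  { PRERENDER_TOKEN: "example-token" }
);
console.log(upstream.url);
```

Failing loudly on a missing token is a design choice for this sketch; it surfaces a configuration mistake immediately instead of sending requests that Prerender.io cannot associate with your account.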

After completing the above steps, you can upload your sitemap to Prerender.io so that it crawls the listed pages and caches them.
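If the site does not have a sitemap yet, one can be generated from a list of page URLs. The following is a minimal sketch that follows the sitemaps.org format; `buildSitemap`, `escapeXml`, and the example URLs are assumptions for illustration, and how the page list is obtained depends on your project.

```javascript
// Escapes the characters that are not allowed verbatim in XML text.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Produces a sitemap document with one <url> entry per page.
function buildSitemap(urls) {
  const entries = urls
    .map((url) => `  <url><loc>${escapeXml(url)}</loc></url>`)
    .join("\n");
  return (
    '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    entries +
    "\n</urlset>\n"
  );
}

// Placeholder pages, for illustration only.
const sitemap = buildSitemap([
  "https://your-domain.com/",
  "https://your-domain.com/products?id=1&lang=zh-tw",
]);
console.log(sitemap);
```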

Conclusion

At the time of writing, the free plan of Prerender.io allows 1,000 pre-renders per month, which is enough for small or infrequently changed websites. This approach is also a relatively easy way to solve SPA SEO problems, since the original project code does not need to be modified. Still, after this experience, I will choose the framework and approach for the next project based on whether it needs SEO. After all, with too many projects, once the service starts charging, the cost could become unaffordable😶.
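A rough way to judge whether a site fits within the 1,000 pre-renders mentioned above is to multiply the number of pages by how often each page is re-cached in a month. This is only back-of-the-envelope arithmetic: `estimateMonthlyRenders` and the re-cache interval are hypothetical, and how Prerender.io actually counts renders against the quota is an assumption here.

```javascript
// Figure quoted in this article; check the current plan before relying on it.
const FREE_RENDERS_PER_MONTH = 1000;

// Estimates renders per month, assuming every page is re-rendered
// once per re-cache interval (an assumption, not Prerender.io's documented rule).
function estimateMonthlyRenders(pageCount, recacheIntervalDays, daysInMonth = 30) {
  if (pageCount < 0 || recacheIntervalDays <= 0) {
    throw new RangeError("pageCount must be >= 0 and recacheIntervalDays > 0");
  }
  const rendersPerPage = Math.ceil(daysInMonth / recacheIntervalDays);
  return pageCount * rendersPerPage;
}

const weekly = estimateMonthlyRenders(200, 7);  // 200 pages * 5 renders each
const daily = estimateMonthlyRenders(100, 1);   // 100 pages * 30 renders each
console.log(weekly, weekly <= FREE_RENDERS_PER_MONTH);
console.log(daily, daily <= FREE_RENDERS_PER_MONTH);
```

Under these assumptions, 200 pages refreshed weekly just fit the quota, while 100 pages refreshed daily exceed it three times over.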


Alvin

Software engineer who dislikes pointless busyness, enjoys solving problems with logic, and strives to find balance between the blind pursuit of achievements and a relaxed lifestyle.